#21

Senior Member

Joined: Jul 2005

Posts: 7819

http://uk.theinquirer.net/?article=38011

Meanwhile, we hear Intel's discrete graphics part will be sampling in second half of next year, with some of usual suspects (Micron, Qimonda or Samsung) GDDR chips on the PCB. Release date is still somewhere between Yuletide '08 and Q1 2009.

__________________

Sample is selezionated !

#22

Senior Member

Joined: Jan 2001

Posts: 9098

There is still so much time to go... so many things will change that the news right now is obviously just there to build hype.

#23

Senior Member

Joined: Jul 2005

Posts: 7819

Quote:

http://www.tcmagazine.com/comments.p...=16064&catid=6

Giant silicon salamander Intel Corporation has just announced that it is set to buy Havok Inc, in-game physics specialist based in Dublin, Ireland.

"Havok is a proven leader in physics technology for gaming and digital content, and will become a key element of Intel's visual computing and graphics efforts," said Renee J. James, Intel's VP and general manager of Software and Solutions Group.

The acquisition, which still has a few legal issues to surpass, will see Havok become a wholly owned subsidiary of Intel while its software will take more 'advantage' of Intel's technology and chips.

Founded in 1998, Havok has become a very popular third-party developer which has seen its software used in games like Half-Life 2, Halo 2, BioShock, TES IV Oblivion and many more. The financial details of the deal have not been disclosed but we believe Intel dug deep into its pocket for Havok.

#24

Senior Member

Joined: Aug 2007

Location: Lugano

Posts: 652

My dream

An Intel and NVIDIA Fusion: who knows what kind of CPUs and graphics cards would come out of that! Just a dream for now.

__________________

P5Q Deluxe Q6600(b3) 3.4 Ballistix 8500 tracer with led GtX 660TI Velociraptor Thermaltake Tai-Chi g51 G19 razer AC-1 Sennheiser Pc 333d Samsung 750d

#25

Senior Member

Joined: Jul 2005

Posts: 7819

I'd say it's official:

http://www.dailytech.com/Physics+Acc...rticle8896.htm

Intel reaches definitive agreement to purchase physics software developer Havok

Intel has announced it has signed a definitive agreement to purchase software developer Havok, Inc. Havok provides various software development tools to digital animation and game developers and is one of the largest providers for software physics.

“Havok is a proven leader in physics technology for gaming and digital content, and will become a key element of Intel’s visual computing and graphics efforts,” said Renee J. James, Intel vice president and general manager of Software and Solutions Group.

“This is a great fit for Havok products, customers and employees,” remarked Havok CEO David O’Meara. “Intel’s scale of technology investment and customer reach enable Havok with opportunities to grow more quickly into new market segments with new products than we could have done organically. We believe the winning combination is Havok’s technology and customer know-how with Intel’s scale. I am excited to be part of this next phase of Havok’s growth.”

A recent trend is to offload physics processing to either a GPU or dedicated physics processor. So far, though, Ageia, ATI, and NVIDIA have not made much headway in the physics market. Both NVIDIA and ATI have previewed CrossFire and SLI Physics, however, neither company has delivered any actual physics hardware yet.

It’s pretty interesting to note that both ATI and NVIDIA’s physics solutions rely on Havok FX. However, it is unlikely that Intel’s acquisition of Havok will affect Havok’s partnership with either AMD or NVIDIA.

“Havok will operate its business as usual, which will allow them to continue developing products that are offered across all platforms in the industry,” said Renee J. James regarding the future of Havok.

Essentially, Havok will operate as a subsidiary of Intel and will continue to operate as an independent business. This reinforces the belief that current partnerships will not be affected. Havok has partnerships with many of the largest names in the gaming community such as Microsoft, Sony, Nintendo, NVIDIA, and AMD.

Havok has provided software physics for games like Halo 3, The Elder Scrolls IV: Oblivion, Half Life 2 and Lost Planet: Extreme Condition. In addition to providing software that adds physics realism to games, Havok also provides physics for professional software such as Autodesk’s 3DS Studio Max 9.

#26

Senior Member

Joined: Jul 2005

Posts: 7819

Interesting article:

http://www.beyond3d.com/content/news/534

On October 19th, Neoptica was acquired by Intel in relation to the Larrabee project, but the news only broke on several websites in the last 2 days. We take a quick look at what Intel bought, and why, in this analysis piece.

[This piece is the third in a streak of experimental content which uses lists of facts and analysis rather than prose. We believe this should be faster to both read and write, while delivering a clearer message and being easier to follow. So let's see how this goes...]

Neoptica's Employees

* 8 employees (including the two co-founders) according to Neoptica's official website.
* 3 have a background with NVIDIA's Software Architecture group: Matt Pharr (editor of GPU Gems 2) and Craig Kolb who were also Exluna co-founders, and Geoff Berry. Tim Foley also worked there as an intern.
* 2 are ex-Electronic Arts employees: Jean-Luc Duprat (who also worked at Dreamworks Feature Animation) and Paul Lalonde.
* Nat Duca comes from Sony, where he led the development of the RSX tool suite and software development partnerships.
* Aaron Lefohn comes from Pixar, where he worked on GPU acceleration for rendering and interactive film preview.
* Pat Hanrahan also was on the technical advisory board. He used to work at Pixar, where he was the chief architect of the Renderman Interface protocol. His PhD students were also responsible for the creation of both Brook and CUDA.

Neoptica's Vision

* Neoptica published a technical whitepaper back in March 2007. Matt Pharr also gave a presentation at Graphics Hardware 2006 that highlights some similar points.
* It explained their perspective on the limitations of current programmable shading and their vision of the future, which they name "programmable graphics". Much of their point resides on the value of 'irregular algorithms' and the GPU's inability to construct complex data structures on its own.
* They argue that a faster link to the CPU is thus a key requirement, with efficient parallelism and collaboration between the two. Only the PS3 allows this today.
* They further claim the capability to deliver many round-trips between the CPU and the GPU every frame could make new algorithms possible and improve efficiency. They plead for the demise of the unidirectional rendering pipeline.

Neoptica's Proposed Solution

* Neoptica claims to have developed a deadlock-free high-level API that abstracts the concurrency between multiple CPUs and GPUs despite being programmed in C/C++ and Cg/HLSL respectively.
* These systems "deliver imagery that is impossible using the traditional hardware rendering pipeline, and deliver 10x to 50x speedups of existing GPU-only approaches."
* Of course, the claimed speed-up is likely for algorithms that just don't fit the GPU's architecture, so it's more accurate to just say a traditional renderer just couldn't work like that rather than claim huge performance benefits.
* Given that only the PS3 and, to a lesser extent, the XBox360 have a wide bus between the CPU and the GPU today, we would tend to believe next-generation consoles were their original intended market for this API.
* Of course, Neoptica doesn't magically change these consoles' capabilities. The advantage of their solution would be to make exotic and hybrid renderers which benefit from both processors (using CELL to approximate high-quality ambient occlusion, for example) much easier to develop.

Intel's Larrabee

* Larrabee is in several ways (but not all) a solution looking for a problem. While Intel's upcoming architecture might have inherent advantages in the GPGPU market and parts of the workstation segment, it wouldn't be so hot as a DX10 accelerator.
* In the consumer market, the short-term Larrabee strategy seems to be to add value rather than try to replace traditional GPUs. This could be accomplished, for example, through physics acceleration and this ties in with the Havok acquisition.
* Unlike PhysX, however, Larrabee is fully programmable through a standard ISA. This makes it possible to add more value and possibly accelerate some algorithms GPUs cannot yet handle, thus improving overall visual quality.
* In the GPGPU market, things will be very different however, and there is some potential for the OpenGL workstation market too. We'll see if rumours about the latter turn out true or not.
* The short-term consumer strategy seems to make Larrabee a Tri- and Quad-SLI/Crossfire competitor, rather than a real GPU competitor. But Intel's ambitions don't stop there.
* While Larrabee is unlikely to excel in DX10 titles (and thus not be cost-competitive for such a market), its unique architecture does give it advantages in more exotic algorithms, ones that don't make sense in DX10 or even DX11. That (and GPGPU) is likely where Intel sees potential.

Intel's Reasoning for Neoptica

* Great researchers are always nice to have on your side, and Intel would probably love to have a next-generation gaming console contract. Neoptica's expertise in CPU-GPU collaboration is very valuable there.
* Intel's "GPU" strategy seems to be based around reducing the importance of next-generation graphics APIs, including DX11. Their inherent advantage is greater flexibility, but their disadvantage is lower performance for current workloads. This must have made Neoptica's claims music to their ears.
* Furthermore, several of Neoptica's employees have experience in offline rendering. Even if Larrabee didn't work out for real-time rendering, it might become a very attractive solution for the Pixars and Dreamworks of the world due to its combination of high performance and (nearly) infinite flexibility.
* Overall, if Intel must stand a chance to conquer the world of programmable graphics in the next 5 years, they need an intuitive API that abstracts the details while making developers remember how inflexible some parts of the GPU pipeline remain both today and in DirectX 11. Neoptica's employees and expertise certainly can't hurt there.

While we remain highly skeptical of Intel's short-term and mid-term prospects for Larrabee in the consumer market, the Neoptica and the Havok acquisitions seem to be splendid decisions to us, as they both potentially expand Larrabee's target market and reduce risk. In addition, there is always the possibility that as much as Intel loves the tech, they also love the instant 'street cred' in the graphics world they get from picking up that group of engineers. We look forward to the coming years as these events and many others start making their impact.

#27

Senior Member

Joined: Jul 2005

Posts: 7819

Another couple of years to go...

http://www.beyond3d.com/content/news/565

When Doug Freedman asked Paul Otellini about Larrabee during yesterday's conference call, we didn't think much would come out of it. But boy were we wrong: Otellini gave an incredibly to-the-point update on the project's timeframe. So rather than try to summarize, we'll just quote what Otellini had to say here.

"Larrabee first silicon should be late this year in terms of samples and we’ll start playing with it and sampling it to developers and I still think we are on track for a product in late ’09, 2010 timeframe."

And yes, the seekingalpha.com transcript says 'Laramie' and we have no idea how anyone could spell it that way given the pronunciation, but whatever. The first interesting point is that Otellini said 'first silicon', as if it wasn't their intention to ship it in non-negligible quantities. So it'd be little more than a prototype, which makes sense: it'll likely take quite some time for both game and GPGPU developers to get used to the architecture and programming model. At least this proves Intel isn't being ridiculously overly confident in terms of software adoption if we're interpreting that right, and that they're willing to make this a long-term investment.

On the other hand, if their first real product is expected to come out in 'late 2009', an important point becomes what process Intel will manufacture it on. If it's 45nm, they'll actually be at a density and power disadvantage against GPUs produced at TSMC, based on our understanding of both companies' processes and roadmaps. But if Intel is really aggressive and that chip is actually on 32nm, then they would be at a real process advantage. That seems less likely to us since it'd imply it would tape-out and release at about the same time as Intel's CPUs on a new process. Either way, it is a very important question.

The next point to consider is that in the 2H09/1H10 timeframe, Larrabee will compete against NVIDIA and AMD's all-new DX11 architectures. This makes architectural and programming flexibility questions especially hard to answer at this point. It should be obvious that NVIDIA and AMD must want to improve those aspects to fight against Larrabee though, so it could be a very interesting fight in the GPGPU market and beyond.
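To put the 45nm versus 32nm question in rough numbers, here is a back-of-the-envelope sketch. It assumes ideal scaling, where transistor density grows with the inverse square of the feature size. That is a textbook simplification, not something the article states: real processes deviate from it, and node names from different foundries are not directly comparable. The function name is made up for this example.

```python
def ideal_density_gain(old_nm: float, new_nm: float) -> float:
    """Idealised density gain when shrinking from old_nm to new_nm.

    Assumption: area per transistor scales with the square of the
    feature size, so density scales with (old / new) squared.
    """
    return (old_nm / new_nm) ** 2


gain = ideal_density_gain(45, 32)
print(f"45nm -> 32nm, ideal density gain: {gain:.2f}x")
```

Under that assumption a 32nm part could fit nearly twice as many transistors in the same area as a 45nm one, which is why the choice of process matters so much for the comparison the article makes.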

#28

Senior Member

Joined: Jul 2005

Posts: 7819

#29

Senior Member

Joined: Jul 2006

Posts: 1568

In about 2 years???!!!

If only that were true!!! But I think it's rather unlikely...

#30

Senior Member

Joined: Jul 2005

Posts: 7819

Obviously Intel says whatever suits it. Two years just to be on the market, and then who knows how long it will take before developers program well for its GPUs. Besides, IMHO it won't follow the direction DX11 sets, so I think it will focus on a console and on GPGPU.

#31

Senior Member

Joined: Jul 2005

Posts: 7819

The consequences of this latest news are very interesting (though it seems I'm the only one who cares).

Source: Project Offset

In a surprising turn of events, Sir Sam McGrath - founder of Project Offset - announced yesterday on the official company website that he and his team were now part of the Intel Kingdom.

"Today we have some major news to announce. Intel has acquired Offset Software. Yes, you read it correctly! Project Offset is going strong and we are excited about things to come. Stay tuned." - Sam McGrath

As one can see, the missive couldn’t be any more to the point, while at the same time being short on information. Information such as an answer to the question everybody has in mind when confronted with this news: Why would Intel buy a small game developer that is currently working on a promising 3D engine and game?

It goes without saying that to us here at Beyond3D, this latest gaming-related purchase from Intel has Larrabee’s shadow all over it. Even though Larrabee’s architecture and scope are still shrouded in mystery, it seems clear to anyone who has followed the development of Intel’s elusive new graphics architecture that the CPU giant realised software would be a key issue for the completion of the project.

Intel has already shown its commitment to purchasing talent via the acquisition of Neoptica last November. In the meantime, the company has been steadily drafting 3D software experts into its stable of 3D knights, including TomF. The inclusion of a full fledged development house is an interesting move, however. We knew that Intel was in dire need of graphics API – or at least optimised libraries - tailored to Larrabee’s specifications. But what good would such a platform be without any impressive game or large application (demo) to take advantage of it?

At the same time, it is worth noting that recent pre-GDC rumours indicated that Intel was in potential talks to purchase German game developer Crytek. Now, did these talkative birds mistake Crytek for Offset Software, or is Intel still in a developer shopping mood? Well, let us wait and see.

#32

Senior Member

Joined: Jan 2001

Posts: 9098

Quote:

__________________

26/07/2003

#33

Senior Member

Joined: Mar 2005

Posts: 431

Advanced realism through accelerated ray tracing

http://it.youtube.com/watch?v=hIdvJO...eature=related

With 50 Xeons in 2004, this ray tracing ran at 4 fps at 640x480. With a current quad core you get about 90 fps at 1280x720, at least as far as I understand.

But tell me if I'm wrong: ray tracing is a lighting technique that lights and reflects EVERYTHING, object by object, so there can't be much room for software-level optimisation if it still has to compute reflections for every single pixel. That means soft shadows, which were extremely heavy on mid-range hardware until a few months ago, will be nothing compared with the shadows and reflections we could have in the future.

One positive is that ray tracing makes very good use of multiple cores. But why should it run on CPUs rather than GPUs?
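A quick way to compare the two figures quoted above is to convert them into pixels rendered per second. The sketch below takes the numbers in the post at face value and assumes one primary ray per pixel; that assumption is mine, and the video may use more rays per pixel, different scenes, or different algorithms in the two cases. The function name is made up for this example.

```python
def pixels_per_second(width: int, height: int, fps: float) -> float:
    """Pixels produced per second at a given resolution and frame rate."""
    return width * height * fps


# Figures as quoted in the post (not independently verified).
cluster_2004 = pixels_per_second(640, 480, 4)    # 50 Xeons, 2004
quad_core = pixels_per_second(1280, 720, 90)     # one current quad core

speedup = quad_core / cluster_2004
print(f"2004 cluster: {cluster_2004:,.0f} pixels/s")
print(f"Quad core:    {quad_core:,.0f} pixels/s")
print(f"Overall throughput ratio: {speedup:.1f}x")
```

Every pixel is computed independently of its neighbours, which is why the workload spreads so easily across cores. The same property would in principle suit any highly parallel processor, so the throughput ratio by itself does not settle the CPU versus GPU question raised above.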

#34

Senior Member

Joined: Jul 2005

Posts: 7819

Quote:

Finally, a little something from an Intel engineer:

For many months, researchers and marketing fanatics at Intel have been heralding the upcoming 'raytracing revolution', claiming rasterisation has run out of steam. So it is refreshing to hear someone actually working on Larrabee flatly denying that raytracing will be the chip's main focus.

Tom Forsyth is currently a software engineer working for Intel on Larrabee. He previously worked at Rad Game Tools on Pixomatic (a software rasterizer) and Granny3D, as well as Microprose, 3Dlabs, and most notably Muckyfoot Productions (RIP). He is well respected throughout the industry for the high quality insight on graphics programming techniques he posts on his blog. Last Friday, though, his post's subject was quite different:

"I've been trying to keep quiet, but I need to get one thing very clear. Larrabee is going to render DirectX and OpenGL games through rasterisation, not through raytracing. I'm not sure how the message got so muddled. I think in our quest to just keep our heads down and get on with it, we've possibly been a bit too quiet. So some comments about exciting new rendering tech got misinterpreted as our one and only plan. [...] That has been the goal for the Larrabee team from day one, and it continues to be the primary focus of the hardware and software teams. [...] There's no doubt Larrabee is going to be the world's most awesome raytracer. It's going to be the world's most awesome chip at a lot of heavy computing tasks - that's the joy of total programmability combined with serious number-crunching power. But that is cool stuff for those that want to play with wacky tech. We're not assuming everybody in the world will do this, we're not forcing anyone to do so, and we certainly can't just do it behind their backs and expect things to work - that would be absurd."

So, what does this actually mean for Larrabee, both technically and strategically? Look at it this way: Larrabee is a DX11 GPU with a design team that took both raytracing and GPGPU into consideration from the very start, while not forgetting performance in DX10+-class games that assume a rasteriser would be the most important factor determining the architecture's mainstream success or failure.

There's a reason for our choice of phrasing: the exact same sentence would be just as accurate for NVIDIA and AMD's architectures. Case in point: NVIDIA's Analyst Day 2008 had a huge amount of the time dedicated to GPGPU, and they clearly indicated their dedication to non-rasterised rendering in the 2009-2010 timeframe. We suspect the same is true for AMD.

The frequent implicit assumption that DX11 GPUs will basically be DX10 GPUs with a couple of quick changes and exposed tessellation is weak. Even if the programming model itself wasn't significantly changing (it is, with the IHVs providing significant input into direction), all current indications are that the architectures themselves will be significantly different compared to current offerings regardless, as the IHVs tackle the problem in front of them in the best way they know how, as they've always done.

The industry gains new ideas and thinking, and algorithms and innovation on the software side mean target workloads change; there's nothing magical about reinventing yourself every couple of years. That's the way the industry has always worked, and those which have failed to do so are long gone.

Intel is certainly coming up with an unusual architecture with Larrabee by exploiting the x86 instruction set for MIMD processing on the same core as the SIMD vector unit. And trying to achieve leading performance with barely any fixed-function unit is certainly ambitious.

But fundamentally, the design principles and goals really aren't that different from those of the chips it will be competing with. It will likely be slightly more flexible than the NVIDIA and AMD alternatives, let alone by making approaches such as logarithmic rasterisation acceleration possible, but it should be clearly understood that the differences may in fact not be quite as substantial as many are currently predicting.

The point is that it's not about rasterisation versus raytracing, or even x86 versus proprietary ISAs. It never was in the first place. The raytracing focus of early messaging was merely a distraction for the curious, so Intel could make some noise. Direct3D is the juggernaut, not the hardware.

"First, graphics that we have all come to know and love today, I have news for you. It's coming to an end. Our multi-decade old 3D graphics rendering architecture that's based on a rasterization approach is no longer scalable and suitable for the demands of the future."

That's why the message got so muddled, Tom. And no offence, Pat, but history will prove you quite wrong.
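For readers who want the rasterisation versus raytracing distinction in concrete terms, here is a minimal sketch. It is not how Larrabee or any real GPU works. It only illustrates the loop order: a rasteriser walks over primitives and finds the pixels each one covers, while a ray caster walks over pixels and finds the primitive each ray hits. The scene uses an orthographic view, flat-depth triangles, and made-up names throughout, all chosen to keep the example short.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]
# (v0, v1, v2, depth, colour id). One flat depth per triangle is a
# simplification for this sketch; real renderers interpolate depth.
Triangle = Tuple[Point, Point, Point, float, int]


def edge(a: Point, b: Point, p: Point) -> float:
    """Signed area test: which side of the line a->b the point p lies on."""
    return (b[0] - a[0]) * (p[1] - a[1]) - (b[1] - a[1]) * (p[0] - a[0])


def covers(tri: Triangle, p: Point) -> bool:
    """True if p is inside the triangle, for either vertex winding."""
    v0, v1, v2 = tri[0], tri[1], tri[2]
    w0, w1, w2 = edge(v1, v2, p), edge(v2, v0, p), edge(v0, v1, p)
    return (w0 >= 0 and w1 >= 0 and w2 >= 0) or (w0 <= 0 and w1 <= 0 and w2 <= 0)


def rasterise(tris: List[Triangle], width: int, height: int) -> List[List[int]]:
    """Object order: outer loop over triangles, inner loop over covered pixels."""
    colour = [[0] * width for _ in range(height)]
    depth = [[math.inf] * width for _ in range(height)]
    for tri in tris:
        xs = [v[0] for v in tri[:3]]
        ys = [v[1] for v in tri[:3]]
        x0, x1 = max(0, math.floor(min(xs))), min(width - 1, math.floor(max(xs)))
        y0, y1 = max(0, math.floor(min(ys))), min(height - 1, math.floor(max(ys)))
        for y in range(y0, y1 + 1):
            for x in range(x0, x1 + 1):
                # Depth buffer keeps the nearest triangle seen so far.
                if covers(tri, (x + 0.5, y + 0.5)) and tri[3] < depth[y][x]:
                    depth[y][x] = tri[3]
                    colour[y][x] = tri[4]
    return colour


def raycast(tris: List[Triangle], width: int, height: int) -> List[List[int]]:
    """Image order: outer loop over pixels, inner loop over triangles."""
    colour = [[0] * width for _ in range(height)]
    for y in range(height):
        for x in range(width):
            nearest = math.inf
            for tri in tris:
                # An orthographic ray through the pixel centre hits the
                # triangle exactly when the centre lies inside it.
                if covers(tri, (x + 0.5, y + 0.5)) and tri[3] < nearest:
                    nearest = tri[3]
                    colour[y][x] = tri[4]
    return colour


scene: List[Triangle] = [
    ((1.0, 1.0), (7.0, 1.0), (1.0, 7.0), 2.0, 1),  # farther triangle
    ((0.0, 0.0), (4.0, 0.0), (0.0, 4.0), 1.0, 2),  # nearer triangle
]
print(rasterise(scene, 8, 8) == raycast(scene, 8, 8))
```

Both functions produce the same image for this scene. The difference lies in how the work is organised, which is consistent with the article's point that the hardware question is less about one technique against the other than about how flexibly a chip can run either.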

#35

Senior Member

Joined: Jul 2005

Posts: 7819

Quad Larrabee : Intel's Answer To Nvidia's Domination?

http://www.vr-zone.com/articles/Quad...n%3F/5767.html

Nvidia is counter-attacking Intel on their upcoming Larrabee GPU calling it a PowerPoint slide and facing constant delays according to a CNET interview with Jen-Hsun Huang. Most probably, the first generation Larrabee will face a tough going against the Nvidia's GT200 series and beyond. However, according to a roadmap VR-Zone has seen, the second generation Larrabee might have a chance against Nvidia's future offerings in 2010-11 with a new architecture, many more cores on 32nm process technology and Quad Larrabee cards support.

Clearly, Intel's strategy here is to increase the number of cores per GPU and increase the number of Larrabee GPUs supported per platform. This is possible due to their advanced fab and process technology, an area which fabless Nvidia has little control over and has to rely on TSMC's process technology. We are eager to see the first hint of Larrabee performance on the current and future games.

#36

Senior Member

Joined: Jul 2005

Posts: 7819

http://news.cnet.com/8301-13924_3-10005391-64.html

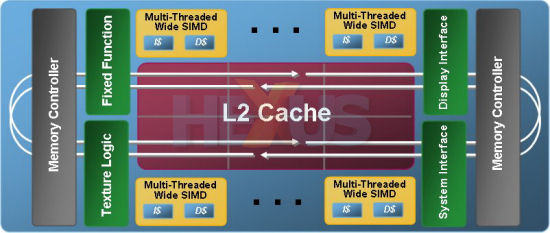

Intel has disclosed details on a chip that will compete directly with Nvidia and ATI and may take it into uncharted technological and market-segment waters.

Larrabee will be a stand-alone chip, meaning it will be very different than the low-end--but widely used--integrated graphics that Intel now offers as part of the silicon that accompanies its processors. And Larrabee will be based on the universal Intel x86 architecture.

The first Larrabee product will be "targeted at the personal computer market," according to Intel. This means the PC gaming market--putting Nvidia and AMD-ATI directly into Intel's sights. Nvidia and AMD-ATI currently dominate the market for "discrete" or stand-alone graphics processing units.

As Intel sees it, Larrabee combines the best attributes of a central processing unit (CPU) with a graphics processor. "The thing we need is an architecture that combines the full programmability of the CPU with the kinds of parallelism and other special capabilities of graphics processors. And that architecture is Larrabee," Larry Seiler, a senior principal engineer in Intel's Visual Computing Group, said at a briefing on Larrabee in San Francisco last week.

"It is not a GPU as many have mistakenly described it, but it can do most graphics functions," Jon Peddie of Jon Peddie Research, said in an article he posted Friday about Larrabee.

"It looks like a GPU and acts like a GPU but actually what it's doing is introducing a large number of x86 cores into your PC," said Intel spokesperson Nick Knupffer.

Intel describes it in a statement as "the industry's first many-core x86 Intel architecture." Intel currently offers quad-core processors and will offer eight-core processors based on its Nehalem architecture, but Larrabee is expected to have dozens of cores and, later, possibly hundreds.

The number of cores in each Larrabee chip may vary, according to market segment. Intel showed a slide with core counts ranging from 8 to 48.

The individual cores in Larrabee are derived from the Intel Pentium processor and "then we added 64-bit instructions and multi-threading," Seiler said. Each core has 256 kilobytes of level-2 cache allowing the size of the cache to scale with the total number of cores, Seiler said.

Application programming interfaces (APIs) such as Microsoft's DirectX and Apple's OpenCL can be tapped, Seiler said. "Larrabee does not require a special API. Larrabee will excel on standard graphics APIs," he said. "So existing games will be able to run on Larrabee products."

So, what is Larrabee's market potential? Today, the graphics chip market is approaching 400 million units a year and has consolidated into a handful of suppliers. "And of that population, two suppliers, ATI and Nvidia, own 98 percent of the discrete GPU business." according to Peddie.

"And the trend line indicates a flattening to decline in the business...However, Intel is no light-weight start up, and to enter the market today a company has to have a major infrastructure, deep IP (intellectual property), and marketing prowess--Intel has all that and more," Peddie said.

Though more details will be provided at Siggraph 2008, some key Larrabee features:

* Larrabee programming model: supports a variety of highly parallel applications, including those that use irregular data structures. This enables development of graphics APIs, rapid innovation of new graphics algorithms, and true general purpose computation on the graphics processor with established PC software development tools.
* Software-based scheduling: Larrabee features task scheduling which is performed entirely with software, rather than in fixed function logic. Therefore rendering pipelines and other complex software systems can adjust their resource scheduling based on each workload's unique computing demand.
* Execution threads: Larrabee architecture supports four execution threads per core with separate register sets per thread. This allows the use of a simple efficient in-order pipeline, but retains many of the latency-hiding benefits of more complex out-of-order pipelines when running highly parallel applications.
* Ring network: Larrabee uses a 1024 bits-wide, bi-directional ring network (i.e., 512 bits in each direction) to allow agents to communicate with each other in low latency manner resulting in super fast communication between cores.

"A key characteristic of this vector processor is a property we call being vector complete...You can run 16 pixels in parallel, 16 vertices in parallel, or 16 more general program indications in parallel," Seiler said.
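The per-core figures quoted in the article can be multiplied out to see how resources scale across the 8 to 48 core range. The sketch below uses only numbers from the article: 256 KB of L2 per core, four threads per core, a 16-wide vector unit, and a 1024-bit bi-directional ring. The assumption that every core has exactly one 16-wide vector unit is mine, and the class and function names are invented for this example; none of it describes a confirmed product configuration.

```python
from dataclasses import dataclass

L2_PER_CORE_KB = 256      # "256 kilobytes of level-2 cache" per core
THREADS_PER_CORE = 4      # "four execution threads per core"
VECTOR_LANES = 16         # "16 pixels in parallel" (assumed: one unit per core)
RING_BITS_TOTAL = 1024    # bi-directional ring, 512 bits each way


@dataclass(frozen=True)
class LarrabeeConfig:
    """Hypothetical chip configuration defined only by its core count."""
    cores: int

    @property
    def l2_total_kb(self) -> int:
        return self.cores * L2_PER_CORE_KB

    @property
    def hardware_threads(self) -> int:
        return self.cores * THREADS_PER_CORE

    @property
    def vector_lanes(self) -> int:
        return self.cores * VECTOR_LANES


def ring_bytes_per_direction() -> int:
    """Width of one ring direction in bytes: half of the total, 8 bits per byte."""
    return RING_BITS_TOTAL // 2 // 8


for cores in (8, 48):     # the two ends of the range on Intel's slide
    cfg = LarrabeeConfig(cores)
    print(
        f"{cfg.cores:2d} cores: {cfg.l2_total_kb // 1024} MB L2, "
        f"{cfg.hardware_threads} threads, {cfg.vector_lanes} vector lanes"
    )
print(f"Ring width per direction: {ring_bytes_per_direction()} bytes")
```

This makes Seiler's point about cache scaling concrete: because the L2 is attached per core, total cache grows linearly with core count, from 2 MB at 8 cores to 12 MB at 48.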

#37

Senior Member

Joined: Jul 2005

Posts: 7819

The AnandTech article: http://anandtech.com/cpuchipsets/int...spx?i=3367&p=1

#38

Senior Member

Joined: Jan 2001

Posts: 9098

A really interesting article, and the innovative architecture is promising, although this could turn out to be a much hazier and longer-running project than initially thought. Little substance and a lot of hot air, I mean.

The target segment for these hypothetical discrete solutions could also be very low-end, as Anand himself suggests.

#39

Senior Member

Joined: Jul 2005

Posts: 7819

Quote:

Note, though, how well it scales as the core count goes up:

By building the CPU/GPU in-house, Intel will certainly have advantages, but it won't have the half-nodes that TSMC can offer. I hope it makes a big leap with 32nm in 2010.

Also worth noting:

Now I wonder whether NVIDIA will move to a VLIW architecture with its DX11 GPU.

Edit: Intel's ring bus is also worth noting.

Last edited by Foglia Morta: 04-08-2008 at 08:56.

#40

Senior Member

Joined: Jul 2005

Posts: 7819

More articles are coming out:

http://www.heise.de/newsticker/Intel...meldung/113739

http://www.pcper.com/article.php?aid=602

http://techgage.com/article/intel_op...about_larrabee

http://www.hexus.net/content/item.php?item=14757

All times are GMT +1. The time now is 06:28.